Content Type

Pathways

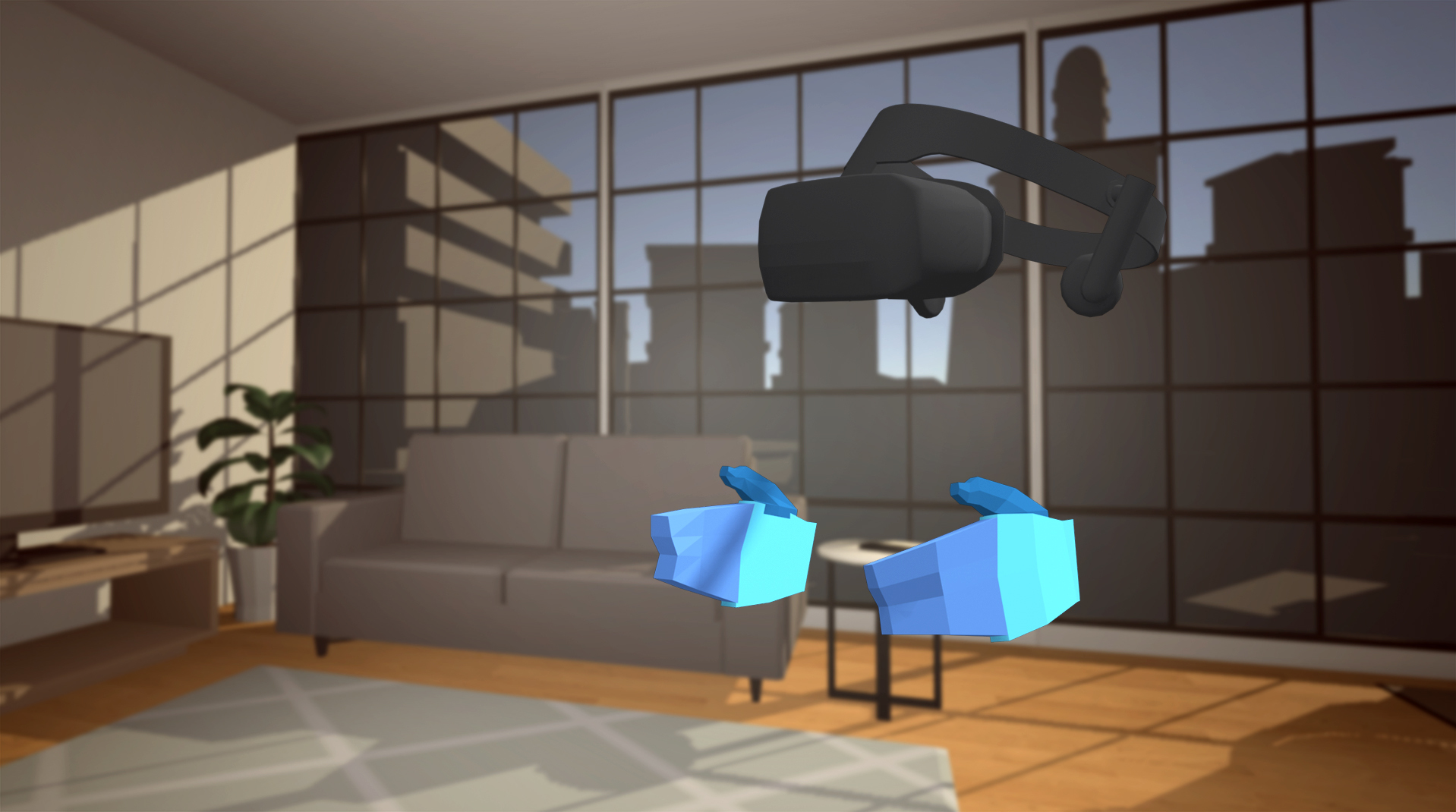

Build skills in Unity with guided learning pathways designed for anyone interested in pursuing a career in gaming or the real-time 3D industry.

View all Pathways

Courses

Explore a topic in-depth through a combination of step-by-step tutorials and projects.

View all Courses